Co-designing AI for Education

Written by Dr Jeremy Knox

When new technologies become available to society, they are often accompanied by tremendous interest in how they might be used and lots of speculation about how our lives might become better, or worse.

The introduction of so-called generative AI in recent years is a typical example, and amongst the many areas of society predicted to be impacted, education is often close to the centre of the speculation. However, like many technologies before it, AI has been designed and produced with very specific kinds of expertise, by a relatively minuscule population of highly trained technical developers. The outsized societal influence of such a small group has attracted increasing attention, and in the case of AI, many are asking whether and how the huge diversity of societal perspectives can be accommodated by a technology designed by so few.

Where commercial AI products are being heavily promoted in public education, there are pressing questions about how young people can have a voice in making real decisions about a technology that seems positioned to greatly impact their future. This is where collaborative design, or ‘co-design’, has been gaining increasing attention, suggesting a range of methods through which different kinds of people can become involved in the design process, not just technical experts.

The project ‘Co-designing AI for Education’ sought to engage with these methods by developing an interactive game through which young people can learn about the impact of technology on education, as well as understand ways of participating in design and having their voices heard.

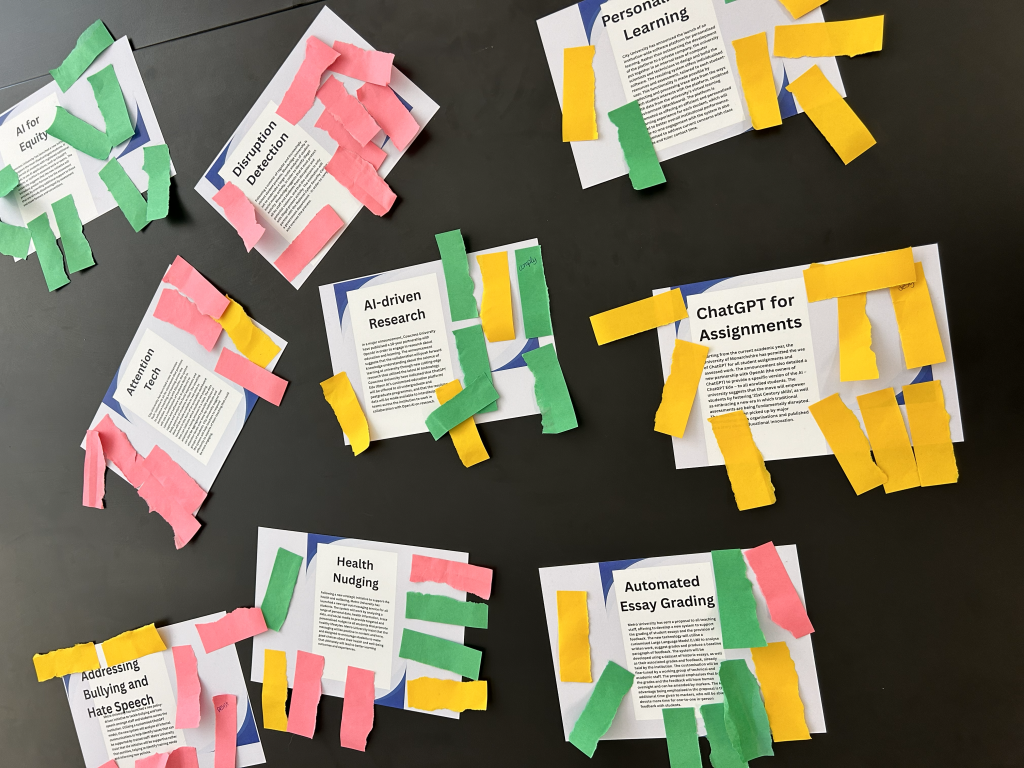

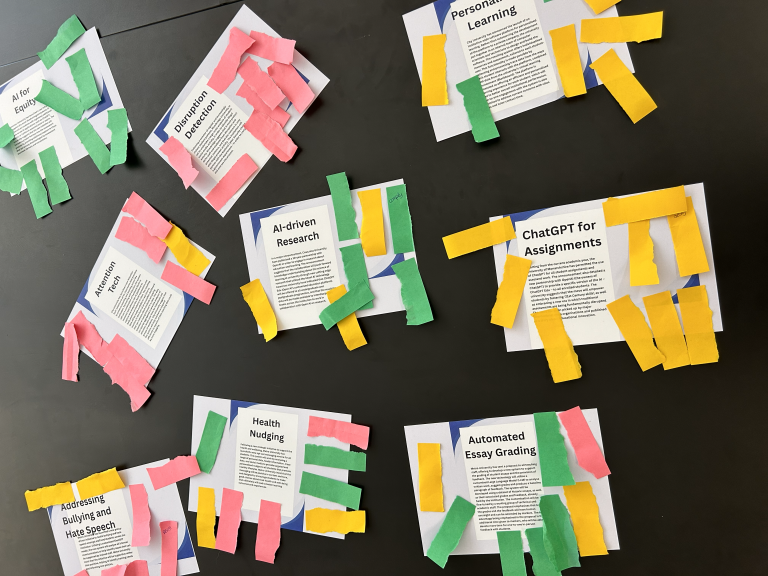

Developed by Dr Jeremy Knox and Dr Isobel Talks, the paper-based game consists of three stages, each of which is intended to encourage groups of participants to interact, exchange, discuss and debate issues related to the use of AI in education.

In the first stage, the game invites participants to discuss a range of fictional future scenarios involving the use of technology in education. These include ‘automated essay grading’ and ‘AI detection of hate speech’, which, while fictional, are intended to suggest both advantages and disadvantages of using the technology in education, in ways that encourage debate across a diversity of opinion. The task at this stage of the game is for participants to vote for the scenarios that they think could be improved through co-design processes.

The second stage helps participants to understand the role of stakeholders – in this context, a broad definition of the people who should have a say in, or who might be impacted directly or indirectly by, the particular technology in question. In education, stakeholders might include teachers, students, parents, policymakers and technology designers, among others. Participants are tasked not only with identifying potential stakeholders, but also with suggesting which voices might be given priority in a particular scenario. For example, participants might be encouraged to discuss which stakeholders should be listened to most closely when designing an AI that can detect hate speech.

The third and final stage is intended to help participants understand what kind of methods, techniques, or approaches might be used in co-design, such as ‘storyboarding’, ‘roleplaying’, or ‘data mapping’. Participants are tasked with discussing how specific methods might benefit a particular scenario, for example through surfacing the lived experiences of users or visualising how data might be collected and managed.

Through piloting the game with postgraduate students, the project team were able to refine aspects of the game design, as well as gather data about how young people approach questions and dilemmas about technology adoption in education. We found that, rather than being simply passive about the use of technology, or indeed wholly optimistic about the future of AI, students expressed nuanced views that attested to the difficulty of decision-making about how and why such technologies might be used in education. Moreover, our data demonstrated a diversity of views that challenges the idea of a straightforward or uniform ‘student voice’. Rather, students often expressed distinct or contrasting views that, at least on the surface, seemed challenging to reconcile.

These findings indicate a need not only to listen to students, but also to provide the structural means to accommodate a diversity of views, and to resist the temptation to hastily summarise and resolve student perspectives. Students appeared to acknowledge and accept the ambivalence surrounding a technology such as AI, and showed a willingness to debate and exchange views in response.

This, we argue, reflects a key aspect of collaboration, and shows how young people can be included as authentic participants in the ways educational institutions make decisions about AI in the future.